Clin Exp Reprod Med, Volume 51(1), 2024

Abstract

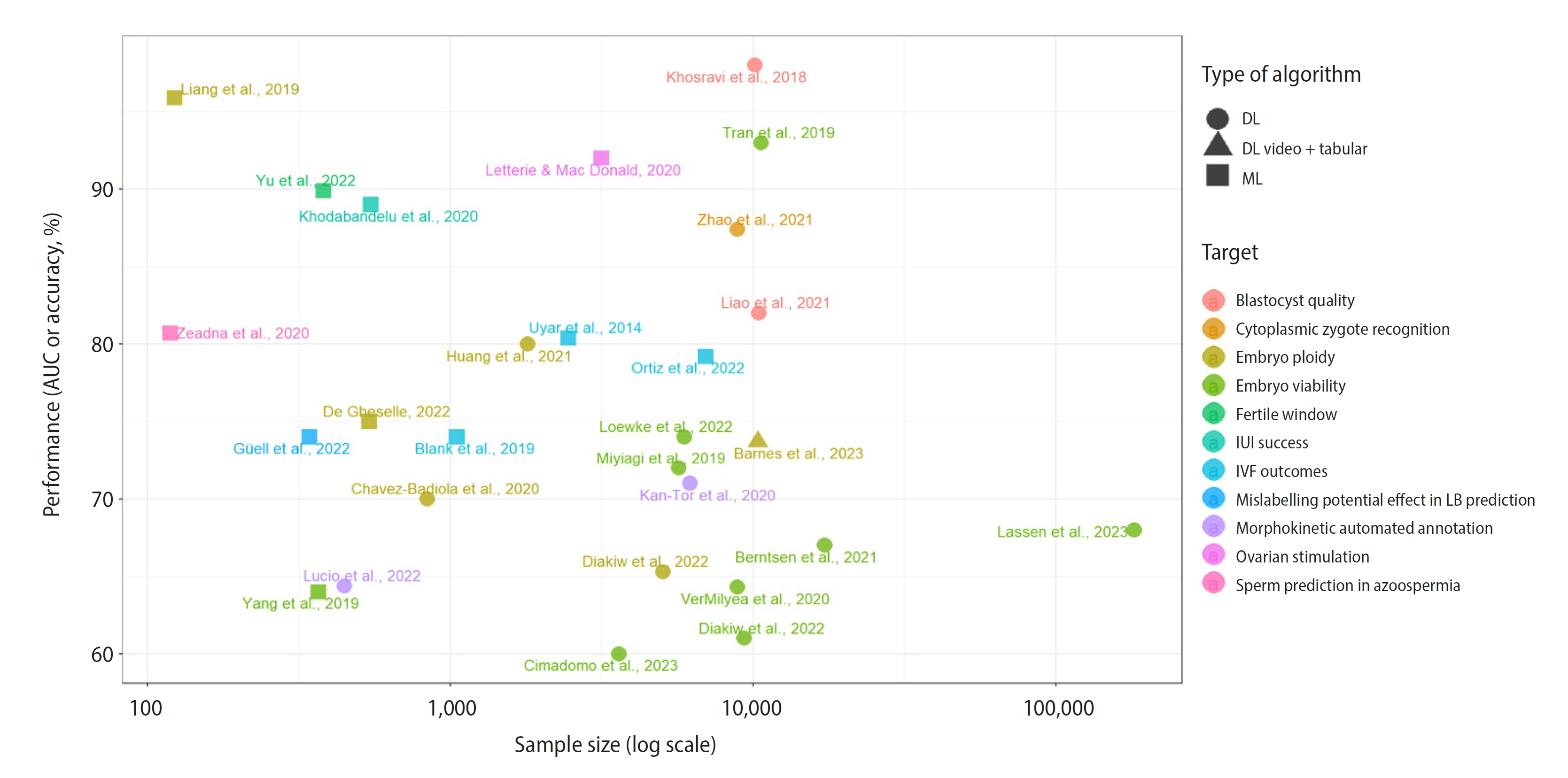

Figure 1.

Table 1.

| Study | Target | No. of input features | Dataset size | Type of input | Results | Limitations |
|---|---|---|---|---|---|---|
| Uyar et al. (2015) [6] | IVF outcomes (implantation) | 11 | 2,453 | Patient metadata and embryo morphological characteristics | Accuracy=80.4%; Sensitivity=63.7% | Retrospective study, embryo transfers performed at cleavage stage (D+2/3), manual assessment, imbalanced dataset, LB not used as an endpoint, lack of multi-center evaluation. |
| Blank et al. (2019) [7] | IVF outcomes (implantation) | 32 | 1,052 | Patient metadata | AUC=0.74; Sensitivity=0.84; Specificity=0.58 | Retrospective study, limited sample size, lack of external validation, balanced training set but imbalanced testing set, LB not used as an endpoint. |
| Goyal et al. (2020) [37] | IVF outcomes (LB) | 25 | 141,160 | Patient metadata | AUC=0.846; Recall=76%; Precision=77% | Retrospective study, imbalanced dataset adjusted by downsampling, limited generalizability due to specific population data, limited number of factors in the dataset. |
| Ortiz et al. (2022) [28] | IVF cycle outcomes (mosaicism/aneuploidy) | 29 | 6,989 | Patient metadata classified into six groups: general, maternal, paternal, couple-related, IVF cycle-related, and embryo-related | AUC_aneuploidy=0.792; AUC_mosaicism=0.776 | Retrospective study, biased dataset as only PGT-A embryos included, imbalanced dataset, lack of external validation. |
| Zeadna et al. (2020) [10] | Sperm retrieval prediction in azoospermia | 14 | 119 | Patient metadata (hormonal levels, age, body mass index, histopathology, varicocele, etc.) | AUC=0.807 | Retrospective study, limited generalizability due to the heterogeneity of the non-obstructive azoospermia population, limited sample size, only TESE used, imbalanced dataset, lack of multi-center evaluation. |
| Guell Penas et al. (2022) [36] | Potential effect of mislabelling on LB prediction | 4 | 343 | Morphokinetic parameters | AUC_aneuploid=0.74; AUC_KIDn=0.59 | Retrospective study, PGT-A on D+3, mosaicism not considered, limited sample size, lack of multi-center evaluation, manually annotated kinetic parameters. |
| Yang et al. (2019) [17] | Embryo viability (implantation, LB) | 5 | 367 | Morphokinetic parameters | AUC_implantation=0.69; AUC_LB=0.64 | Morphokinetic parameters of conventional IVF and ICSI embryos merged without adjusting t0 for the sperm-entry lag in conventional IVF or using intervals, imbalanced dataset, limited sample size, lack of multi-center evaluation, potentially mislabelled embryos in the non-implanted group, retrospective study. |
| Liang et al. (2019) [18] | Embryo ploidy status | 1 | 123 embryos (1,107 Raman spectra) | Raman spectra | Accuracy=95.9% | Retrospective study, limited sample size, LB not used as an endpoint, lack of multi-center evaluation. |
| De Gheselle et al. (2022) [27] | Embryo ploidy status | 85 | 539 | Morphokinetic parameters, standard developmental features, and patient metadata | AUC=0.75; Accuracy=71% | Retrospective study, limited sample size, LB not used as an endpoint, lack of multi-center evaluation. |
| Yu et al. (2022) [29] | Fertile window | 2 | 382 | Basal body temperature and heart rate | AUC=0.899; Accuracy=87.5%; Sensitivity=69.3%; Specificity=92% | Retrospective study, lower predictive performance for irregular menstruators (AUC=0.725), lack of multi-center evaluation. |
| Khodabandelu et al. (2022) [31] | IUI success | 8 | 546 | Patient metadata, sperm sample data, antral follicle count | Gmean=0.80; AUC=0.89; Brier score=0.129 | Retrospective study, imbalanced dataset, lack of multi-center evaluation. |
| Letterie et al. (2020) [32] | Ovarian stimulation day-to-day decision-making tool | 4 | 3,159 | Stimulation control data (oestradiol level, follicle measurement, cycle day, and recombinant FSH dose) | Accuracy_continue=0.92; Accuracy_trigger=0.96; Accuracy_dosage=0.82; Accuracy_days=0.87 | Retrospective study, lack of multi-center evaluation, manual assessment of ultrasound observations, imbalanced dataset. |
| Hariton et al. (2021) [33] | Ovarian stimulation trigger decision-making tool | 12 | 7,866 | Number of follicles 16–20 mm in diameter, number of follicles 11–15 mm in diameter, oestradiol level, age, body mass index, protocol type | Average improvement in total 2PNs and usable blastocysts compared with the physician's decision | Retrospective study, long study period (>10 years). |
| Fanton et al. (2022) [34] | Gonadotrophin starting dose | 4 | 18,591 | Age, body mass index, anti-Müllerian hormone, antral follicle count | Mean absolute error of 3.79 MII oocytes; r²=0.45 for MII prediction | Retrospective study, model based on U.S. population, confounding factors such as dose adjustments and trigger timing not accounted for, exclusion of cycles with missing data. |
| Correa et al. (2022) [35] | Ovarian stimulation first FSH dosage | 5 | 3,487 | Age, body mass index, anti-Müllerian hormone, antral follicle count, previous LB | The model's score approached the best possible dose more often than clinicians' choices | Retrospective study, tendency of the model to overdose some patients due to underrepresentation of hyper-responders, limited generalizability due to specific population data, lack of multi-center evaluation. |

The main limitations, results, and sample size are presented in this table.

IVF, in vitro fertilization; LB, live birth; AUC, area under the curve; PGT-A, preimplantation genetic testing for aneuploidy; TESE, testicular sperm extraction; KIDn, negative known implantation embryos (non-implanted embryos); ICSI, intracytoplasmic sperm injection; FSH, follicle-stimulating hormone; IUI, intrauterine insemination; PN, pronucleate; MII, metaphase II oocyte.
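Most rows of Table 1 report threshold metrics (accuracy, sensitivity, specificity), the AUC, and, for Khodabandelu et al. [31], a G-mean and a Brier score, while many list an imbalanced dataset as a limitation. The Python sketch below shows the textbook form of these metrics on made-up toy labels (not data from any cited study) and illustrates why accuracy alone can look good when the positive outcome is rare. It assumes G-mean means the geometric mean of sensitivity and specificity, the usual definition in imbalanced classification; the cited study may define it differently. All function names are illustrative.

```python
"""Sketch of common binary-classification metrics; toy data only."""
import math
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for 0/1 labels, with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def threshold_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    """Metrics computed from hard (already thresholded) predictions."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)  # recall, true-positive rate
    specificity = tn / (tn + fp)  # true-negative rate
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        # PPV and NPV are undefined (nan) when nothing is predicted in that class
        "ppv": tp / (tp + fp) if tp + fp else float("nan"),
        "npv": tn / (tn + fn) if tn + fn else float("nan"),
        # Assumed G-mean definition: sqrt(sensitivity * specificity);
        # it drops to 0 if either class is never detected.
        "gmean": math.sqrt(sensitivity * specificity),
    }


def brier_score(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Mean squared difference between predicted probability and 0/1 outcome (lower is better)."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


def roc_auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AUC as the probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical imbalanced labels: 10 positives, 90 negatives.
    y_true = [1] * 10 + [0] * 90
    # A degenerate "model" that always predicts the majority (negative) class.
    m = threshold_metrics(y_true, [0] * 100)
    print(m["accuracy"], m["sensitivity"], m["gmean"])  # 0.9 0.0 0.0
    print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

The always-negative toy model reaches 0.9 accuracy while detecting no positive case, so its sensitivity and G-mean are both 0. This is why metrics that account for both classes are more informative than accuracy when a dataset is imbalanced.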

Table 2.

| Study | Target | Dataset size | Type of input | Results | Limitations |
|---|---|---|---|---|---|
| Mendizabal-Ruiz et al. (2022) [9] | Sperm selection (fertilization and blastocyst formation) | 383 | Video | Software scores associated with fertilization (p=0.004) and blastocyst formation (p=0.013) | Retrospective study, limited sample size, lack of standardized imaging protocols, lack of multi-center evaluation. |
| Nayot et al. (2021) [11] | Oocyte quality and blastocyst development | 16,373 | Single image | Blastocyst rate by SR: (0–2.5)=16%; (2.6–5)=36.9%; (5.1–7.5)=44.2%; (7.6–10)=53.4% | Retrospective study, male factor not taken into consideration, lack of standardized imaging protocols, limited generalizability due to specific population data, LB not used as an endpoint. |
| Zhao et al. (2021) [12] | Cytoplasmic zygote recognition | 8,877 | Single image | AUC=0.874 | Retrospective study, limited sample size, requirement for high-quality images and standardized imaging protocols, lack of external validation. |
| Kan-Tor et al. (2020) [13] | Morphokinetic automated annotation (blastocyst, implantation) | 6,200 (blastocyst); 5,500 (implantation) | Video | AUC_blastocyst=0.83; AUC_implantation=0.71 | Retrospective study, insufficient information on how class imbalance was assessed, LB not used as an endpoint, potentially mislabelled embryos in the non-implanted group. |
| Feyeux et al. (2020) [14] | Morphokinetic automated annotation | 701 | Video | Manual vs. automated annotation concordance, r²=0.92 | Retrospective study, lack of multi-center evaluation, only one focal plane. |
| Lucio et al. (2022) [15] | Morphokinetic automated annotation (ploidy, implantation) | 448 | Video | Concordance correlation coefficient ranging from 0.813 (tPNf) to 0.947 (tSB); AUC_blastocyst=0.814; AUC_ploidy=0.644 | Retrospective study, imbalanced dataset, LB not used as an endpoint, mosaic score not significantly predictive, potentially mislabelled embryos in the non-implanted group. |
| Khosravi et al. (2018) [16] | Blastocyst quality | 10,148 | Single image | AUC=0.98; Accuracy=96.94%; IR Good-Morph and <37 years=66.3%; IR Poor-Morph and ≥41 years=13.8% | Retrospective study, lack of external validation, possibly limited sample size, LB not used as an endpoint. |
| Liao et al. (2021) [8] | Blastocyst stage and quality | 10,432 | Video | AUC=0.82; Accuracy=78.2% | Retrospective study, lack of multi-center evaluation, clinical characteristics not taken into account, only one focal plane of 3D embryos, LB not used as an endpoint. |
| VerMilyea et al. (2020) [42] | Embryo viability (blastocyst, implantation) | 8,886 | Single image | Accuracy=64.3%; Sensitivity=70.1%; Specificity=60.5%; AI improvement vs. embryologists' accuracy=24.7% | Retrospective study, model trained only on day 5 transferred embryos, LB not used as an endpoint, funded by commercial companies, potentially mislabelled embryos in the non-implanted group. |
| Tran et al. (2019) [41] | Embryo viability (implantation) | 10,638 | Video | AUC=0.93 | Retrospective study, imbalanced dataset, arrested embryos included, random prediction for non-arrested embryos, limited sample size, LB not used as an endpoint, funded by commercial companies, potentially mislabelled embryos in the non-implanted group. |
| Diakiw et al. (2022) [21] | Embryo viability (implantation) | 9,359 | Single image | AUC=0.61; Accuracy=61.8% | Retrospective study, use of simulated cohort ranking analyses, LB not used as an endpoint. |
| Berntsen et al. (2022) [23] | Embryo viability (implantation) | 17,249 | Video | AUC_implantation=0.67; AUC_all=0.95 | Retrospective study, imbalanced dataset, LB not used as an endpoint, funded by commercial companies, potentially mislabelled embryos in the non-implanted group. |
| Loewke et al. (2022) [40] | Embryo viability (implantation) | 5,923 | Single image | AUC=0.74; score difference of >0.1 associated with higher pregnancy rates | Retrospective study, limited sample size, lack of standardized imaging protocols. |
| Theilgaard Lassen et al. (2023) [24] | Embryo viability (implantation) | 181,428 | Video | AUC_blind=0.68; AUC_D+5=0.707; AUC_D+3=0.621; AUC_D+2=0.669; AUC_all=0.954 | Retrospective study, imbalanced dataset, upsampling may increase sample bias, AUC_all included arrested embryos, funded by commercial companies, potentially mislabelled embryos in the non-implanted group. |
| Miyagi et al. (2019) [38] | Embryo viability (LB) | 5,691 | Single image | AI vs. conventional embryo evaluation: 0.64/0.61, 0.71/0.70, 0.78/0.77, 0.81/0.83, 0.88/0.94, and 0.72/0.74 for the age categories <35, 35–37, 38–39, 40–41, and ≥42 years and all ages, respectively | Retrospective study, imbalanced dataset, limited sample size, lack of standardized imaging protocols, lack of multi-center evaluation, potentially mislabelled embryos in the non-implanted group. |
| Cimadomo et al. (2023) [43] | Embryo viability (implantation, LB) | 3,604 | Video | AUC_euploid=0.6; AUC_LB=0.66 | Retrospective study, imbalanced dataset, potentially mislabelled embryos in the non-implanted group. |
| Diakiw et al. (2022) [20] | Embryo ploidy status | 5,050 | Video | Accuracy=65.3%; Sensitivity=74.6%; Accuracy_cleansed<sup>a)</sup>=77.4% | Retrospective study, LB not used as an endpoint, lack of standardized imaging protocols, possibly limited sample size. |
| Huang et al. (2021) [39] | Embryo ploidy status | 1,803 | Patient metadata and video | AUC=0.8 | Retrospective study, limited sample size, imbalanced dataset, manually annotated kinetic parameters, lack of multi-center evaluation, model based only on PGT-A patients, LB not used as an endpoint. |
| Chavez-Badiola et al. (2020) [19] | Embryo ploidy status and viability (implantation) | 840 | Single image | Accuracy=70%; PPV=79%; NPV=66%; higher ranking metric (NDCG) than random selection | Retrospective study, imbalanced dataset, lack of multi-center evaluation, limited sample size, LB not used as an endpoint, potentially mislabelled embryos in the non-implanted group. |
| Barnes et al. (2023) [22] | Embryo ploidy status | 10,378 | Patient metadata, video, morphokinetics, embryo grading | AUC=0.737; Accuracy=65.7%; aneuploid predictive value=82.3% | Retrospective study, biased dataset as only PGT-A embryos included, lack of standardized imaging protocols, manually annotated morphokinetics and morphological assessments, differences in mosaic reporting across genetic laboratories, mosaicism not considered during model development, LB not used as an endpoint. |
| Cimadomo et al. (2022) [26] | Blastocoel collapse and its relationship with degeneration and aneuploidy | 2,348 | Video | Degeneration and aneuploidy rates directly related to the number of collapses | Retrospective study, limited sample size, mosaicism could not be reliably assessed, no differences in LBR. |

The main limitations, results, and sample size are presented in this table.

SR, score range; LB, live birth; LBR, live birth rate; AUC, area under the curve; tPNf, time of pronuclear fading; tSB, time of starting blastulation; IR, implantation rate; 3D, three-dimensional; AI, artificial intelligence; PGT-A, preimplantation genetic testing for aneuploidy; PPV, positive predictive value; NPV, negative predictive value; NDCG, normalised discounted cumulative gain.
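Two metrics in Table 2 are less familiar than the AUC: the NDCG that Chavez-Badiola et al. [19] used to compare embryo rankings with random selection, and the concordance correlation coefficient that Lucio et al. [15] reported for annotation timings. The Python sketch below shows common textbook forms of both. It assumes linear relevance gains with a log2 position discount for NDCG (variants using 2^rel - 1 gains also exist) and population (1/n) moments for Lin's concordance correlation coefficient; the inputs are hypothetical, and the functions are illustrative rather than the authors' code.

```python
"""Sketches of NDCG (ranking quality) and Lin's concordance correlation coefficient."""
import math
from typing import Sequence


def dcg(relevances: Sequence[float]) -> float:
    """Discounted cumulative gain: sum of rel_i / log2(i + 1), with ranks i starting at 1."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances, start=1))


def ndcg(relevances_in_ranked_order: Sequence[float]) -> float:
    """DCG of the model's ranking divided by the DCG of the ideal (best-first) ranking."""
    ideal = dcg(sorted(relevances_in_ranked_order, reverse=True))
    # If no item is relevant, define NDCG as 0 to avoid dividing by zero.
    return dcg(relevances_in_ranked_order) / ideal if ideal > 0 else 0.0


def lins_ccc(x: Sequence[float], y: Sequence[float]) -> float:
    """Lin's concordance correlation coefficient between paired measurements x and y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # Unlike Pearson's r, the (mx - my)^2 term penalises a systematic offset.
    return 2 * cov / (vx + vy + (mx - my) ** 2)


if __name__ == "__main__":
    # Hypothetical cohort of four embryos in the model's ranked order:
    # 1 = implanted, 0 = not implanted.
    print(round(ndcg([0, 1, 0, 1]), 3))
    # Hypothetical timings where the automated value is always 1 unit later.
    print(lins_ccc([1.0, 2.0, 3.0], [2.0, 3.0, 4.0]))
```

In the toy ranking, the two implanted embryos sit at ranks 2 and 4 rather than 1 and 2, so the NDCG falls below 1. The toy timings are perfectly linearly correlated (Pearson r = 1), yet the concordance coefficient is 4/7 because the constant offset counts as disagreement, which is why it suits manual vs. automated annotation comparisons.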

References
